Beware of the ESG Risks in These 8 AI Companies

A look at which companies are best positioned to navigate an AI-based future.

Artificial intelligence can be a powerful tool for investors, offering the ability to quickly learn about a topic, sift through reams of data, and provide comparisons faster than most humans. At Morningstar, we’ve developed our own such solution: Mo. And my colleagues have previously highlighted the semiconductor industry as a ripe area for AI-focused investors to consider, and they also recently discussed how AI can help—and hinder—ESG-focused investors.

How the ESG Risk Rating Helps AI Investors

But AI isn’t a panacea. It carries perils from the environmental, social, and governance perspectives. To better understand these risks, we can use Sustainalytics’s ESG Risk Rating, and the management component within it, to determine which AI leaders in both the semiconductor and software industries are best placed to avoid future controversies.

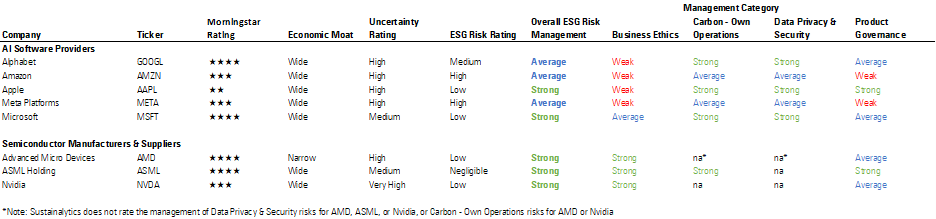

The management of several material ESG issues, or MEIs, in particular can highlight which companies may be best placed to successfully navigate an AI-based future. These material issues include Business Ethics; Carbon—Own Operations; Data Privacy & Security; and Product Governance.

When I also considered valuation, I found that Microsoft MSFT, Advanced Micro Devices AMD, and ASML Holding ASML rise to the top of our AI opportunity list, while Amazon.com AMZN and Meta Platforms META carry greater ESG risk alongside less-attractive current stock prices.

Some Leading AI Companies Are Deemed to Have Better ESG Management Than Others

A couple of caveats: These are assessments, not necessarily predictions. Moreover, AI comprises a very small portion of these firms’ current revenue (and perhaps of their near-term future revenue, too).

Nonetheless, it’s important for AI-focused investors to understand the key ESG risks facing these companies, given the potential for financially material harm to future cash flows if the issues are not properly managed.

The ESG Risks of AI, in Its Own Words

When criticizing someone, it seems only fair to offer them a chance to defend themselves. In this case, I was able to ask AI directly about its ESG risks.

To its credit, when prompted, Bing Chat (which uses OpenAI’s GPT-4 technology) does a fairly good job of outlining several key ESG risks of AI and linking to relevant articles and research to support its answer.

Question: What are the ESG risks of AI?

Answer (via Bing Chat): AI-related ESG (Environmental, Social, and Governance) risks are a growing concern. Here are some of the key risks:

1. Environmental Impact: AI systems may pose a significant threat to sustainability goals due to the heavy computing power needed to train large neural networks. The carbon footprint from storing and processing data is enormous and growing.

2. Social Risks: AI systems have been found to potentially perpetuate racial inequality and disrupt democratic processes. There are also concerns about whether individuals are treated fairly by AI systems, or whether existing societal inequities are being replicated or even amplified.

3. Governance Risks: A lack of technology skills in both senior management ranks and the general workforce can leave firms vulnerable to mistakes. Issues such as algorithmic bias, job displacement, accountability, data privacy, and reliability are also significant concerns.

Investors and businesses need to be aware of these risks when implementing AI systems and should strive for responsible AI development, procurement, and usage practices.

Focusing on Management of Key AI Company Risks

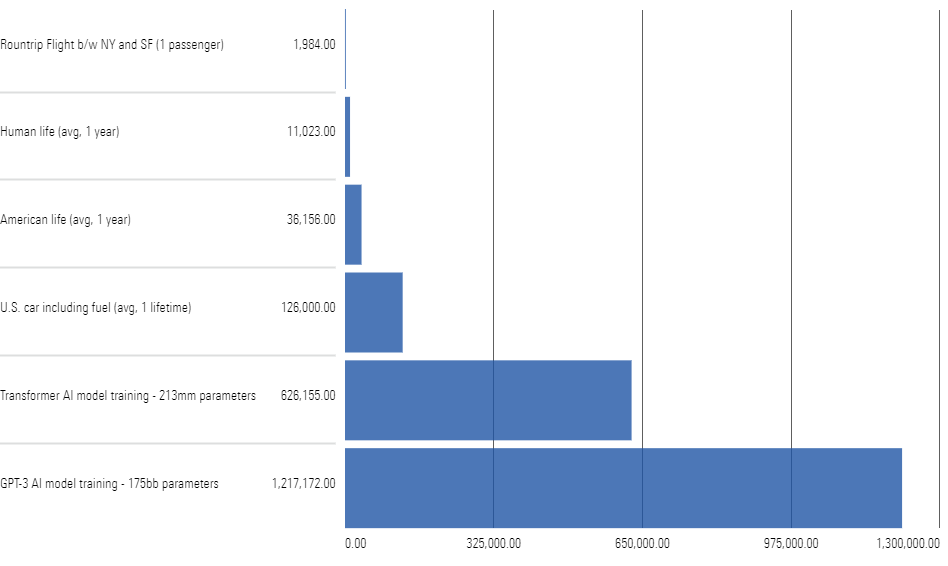

Environmental concerns related to AI arguably rank highest among the pressing ESG issues brought up by Bing. A 2019 study from MIT, for instance, found that training a large AI model at the time could emit nearly five times the lifetime emissions of the average U.S. car. And this figure has since increased: A 2021 study estimated that training OpenAI’s GPT-3 was nearly twice as carbon-intensive.

Training AI Models Is Highly Carbon-Intensive

But Bing Chat also points out social and governance problems such as inherent biases, job displacement, and necessary stewardship by senior leadership at a company.

The management component of Sustainalytics’s ESG Risk Ratings can offer some insight. Sustainalytics assigns firms Weak, Average, or Strong management scores for specific MEIs. For AI, the most-pertinent MEIs that cover the above issues are Business Ethics, Carbon—Own Operations, and Product Governance.

How a company manages its carbon output is an important consideration in our original screen given the energy intensity of AI development. How it manages its business ethics considers governance by the board and management related to issues such as intellectual property, anticompetitive behavior, and incentives to avoid unethical opportunities. And the product governance MEI focuses on several key aspects of product safety, including matters of poorly managed innovation that could lead to mismatches with customer expectations.

Of course, Sustainalytics’s assessments aren’t confined to artificial intelligence. But companies following best practices related to management of these material ESG issues are arguably best positioned to deal with relevant challenges as AI offerings continue to grow.

AI Threatens Privacy

There is one additional key risk that escapes with only a brief mention by Bing: Data Privacy & Security. As consumers increasingly use AI tools like natural language processing, the privacy and security of personally identifiable data are paramount.

After all, it might be acceptable for this information to be used when a smart home device offers up a tantalizing ad based on a user’s search history, but it’s more problematic when Amazon is storing children’s voices or Apple AAPL is using human transcribers for future algorithm development. Privacy issues go beyond individuals, too: Earlier this year, Samsung engineers accidentally leaked sensitive programming code to ChatGPT when using the tool for error checking.

AI providers have admittedly addressed many of these concerns in recent years, including Apple no longer hiring contractors to listen to Siri conversations. But the world moves quickly. It may be tempting for companies without proper management controls in place to skirt individual users’ privacy concerns in the name of innovation.

These risks have real-world implications. As my colleagues noted in an article last year, the costs of data breaches continue to rise. According to the latest IBM/Ponemon Institute Cost of a Data Breach 2023 report, this cost per breach rose to $4.45 million, up 15.3% from 2020. And regulators will likely continue to look at this space: Under the proposed EU AI Act, companies that violate the data governance provision could face fines of up to 4% of revenue.
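To put those figures in context, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the $4.45 million cost, the 15.3% increase, and the 4% cap come from the paragraph above, while the function names and the $10 billion revenue figure are hypothetical and chosen purely for the example.

```python
# Figures quoted in the article (IBM/Ponemon Cost of a Data Breach 2023 report).
COST_2023_USD_M = 4.45     # average cost per breach, in millions of dollars
GROWTH_SINCE_2020 = 0.153  # 15.3% increase from 2020


def implied_baseline(current: float, growth: float) -> float:
    """Back out the starting value from a current value and its cumulative growth."""
    return current / (1.0 + growth)


def max_fine(revenue: float, rate: float = 0.04) -> float:
    """Upper bound on a revenue-based fine; 4% is the cap cited for the proposed EU AI Act."""
    return revenue * rate


baseline_2020 = implied_baseline(COST_2023_USD_M, GROWTH_SINCE_2020)
print(f"Implied 2020 cost per breach: ${baseline_2020:.2f} million")

# Hypothetical company with $10 billion in annual revenue (an assumption, not a real firm).
hypothetical_revenue_usd_m = 10_000.0
fine = max_fine(hypothetical_revenue_usd_m)
print(f"Maximum fine at 4% of revenue: ${fine:,.0f} million")
```

Under these assumptions, a single fine at the cap would be far larger than the average cost of a single breach, which is one reason the regulatory risk may matter more to large firms than the breach cost itself.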

Now, responsible AI use can be beneficial; the same IBM study shows that the average cost of a data breach for companies using AI security solutions is nearly 20% lower. But like many new technologies, artificial intelligence can be a double-edged sword. Understanding not only the opportunities but also the key environmental, social, and governance risks is critical for long-term investors in the space.

The author or authors own shares in one or more securities mentioned in this article. Find out about Morningstar’s editorial policies.
