Quick Thinking Leads to Bad Behavior

Key takeaways from Daniel Kahneman's book, "Thinking, Fast and Slow."

A version of this article was published in the December 2018 issue of Morningstar ETFInvestor. Download a complimentary copy of Morningstar ETFInvestor by visiting the website.

Humans are not wired to be good investors. To survive in the wild, we developed a tendency to rely on mental shortcuts to process information quickly, which is helpful for avoiding danger and taking advantage of fleeting opportunities. But this can be a hindrance to the unnatural act of investing.

Psychologist and Nobel laureate Daniel Kahneman explores how people process information and the biases that mental shortcuts can create in his book, “Thinking, Fast and Slow.” While the book isn’t specifically about investing, it explains why we often do dumb things as investors, and is well worth a read. Here, I’ll share some of the key insights from the book and how we can learn from them to become better investors.

A Two-Track Mind

The book's title refers to two distinct thought-processing systems that everyone has, according to Kahneman: System 1 is the fast track that operates automatically and guides most of what we do. It is intuitive and processes information quickly, with little or no voluntary control, allowing for fast decision-making. The first answer that pops into your head when posed with a difficult question, such as how much money you should save for retirement, is probably from System 1.

System 2 is the slow track. It manages complex problem-solving and other mental tasks that require concentrated effort. This system is deliberate, cautious, and…lazy. Most of the time, we rely on System 1 and engage System 2 only when something surprising occurs or when we make a conscious effort. This division of labor allows us to make the most of our limited attention and mental resources, and it usually works well.

But the shortcuts that System 1 takes can lead to poor investment decisions. It suppresses doubt and often substitutes an easier question for the one that was asked. It also evaluates claims not by the quality or quantity of evidence but by the coherence of the story it can create to fit the evidence. To top it off, this system has little understanding of statistics or logic. Worse, it’s hard to recognize those shortcomings in the moment or even to be aware that System 1 is guiding our actions.

Shortcuts That Can Lead to Trouble

Confirmation Bias. The world is full of trade-offs, nuance, and complexity. But that's not conducive to quick thinking. So System 1 paints a picture of the world that is simpler than reality, suppressing doubt. Doubt requires greater effort than belief because it is necessary to reconcile conflicting information. We are biased toward adopting views that are consistent with our prior beliefs or seeking out evidence that supports those beliefs--hence, confirmation bias. This is not only because System 2 is lazy, but also because we have evolved a preference for the comfortable and familiar. In the wild, familiarity is a sign that it is safe to approach; those who aren't cautious of the unknown are more susceptible to danger and less likely to survive.

Yet confirmation bias can lead to bad investment decisions because it can breed overconfidence. For example, it may cause bullish investors to overlook or underweight good reasons not to buy. It may cause others to hold on to bad investments longer than they should, anchoring to their original investment thesis and increasingly attractive valuations while ignoring signs that the fundamentals have deteriorated.

To overcome this potential bias and reduce blind spots, it’s helpful to seek out information that conflicts with what you already believe. If you’re convinced that active management is futile, check out what active shops like Capital Group have to say on the subject. If you’re about to pull money out of stocks because you’re worried about a recession, consider reading what a more bullish commentator, like Jeremy Siegel, has to say first. There’s almost always a good case that could be made for and against any investment, and for every buyer, there’s a willing seller. Before trading, ask yourself, What does the other person know that I don’t? This practice can facilitate a richer understanding of your investments and may reduce the likelihood of overlooking important information.

Question Substitution. It's amazing how many tough questions we can answer with little hesitation. Let's return to the question about how much you should save for retirement. To seriously answer that, it's necessary to estimate future expenses in retirement, expected returns on your investments, life expectancy, and the future state of Social Security. That's all hard System 2 work that few undertake. Instead, most answer an easier question like, How much can I save for retirement? This type of substitution is one of the ways that System 1 enables quick decision-making. It often yields a good-enough answer for the original question--but not always. More troubling, we aren't always aware of this substitution when it takes place.

Investors commonly substitute easy questions (How has this fund manager performed?) for hard ones (Is this manager skilled?). Performance is the result of luck, skill, and risk-taking. The best-performing managers often owe a lot of their success to luck, which is fickle. Past performance alone (over periods longer than a year) is a terrible predictor of future performance. At best, it’s a very noisy proxy for skill. Because the quality of that signal is low, we shouldn’t put much stock in it.
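To see how much luck alone can do, here is a minimal simulation sketch in Python. It is an illustration under stated assumptions, not something taken from Kahneman's book: the function names, the 1,000 managers, the five-year periods, and the 5% annual volatility of excess returns are all hypothetical choices. Every simulated manager has zero skill, so any standout record is pure chance.

```python
import random


def simulate_no_skill_managers(n_managers=1000, n_years=5, annual_vol=0.05, seed=0):
    """Simulate average annual excess returns for managers with zero skill.

    Each yearly excess return is pure noise centered on 0 (an assumption),
    so any ranking among managers reflects luck. Returns one list per period.
    """
    rng = random.Random(seed)

    def average_excess_return():
        draws = [rng.gauss(0.0, annual_vol) for _ in range(n_years)]
        return sum(draws) / n_years

    first_period = [average_excess_return() for _ in range(n_managers)]
    second_period = [average_excess_return() for _ in range(n_managers)]
    return first_period, second_period


def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


first, second = simulate_no_skill_managers()
best = max(range(len(first)), key=lambda i: first[i])  # luckiest manager in period 1

print(f"Best manager, first period:  {first[best]:+.2%} per year")
print(f"Same manager, second period: {second[best]:+.2%} per year")
print(f"Correlation across periods:  {pearson(first, second):+.3f}")
```

In this toy setup, someone always tops the first-period ranking with an impressive-looking record, yet first-period results carry essentially no information about the second period. Real managers are not pure noise, but the sketch shows why a strong record by itself says little about skill.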

To avoid falling prey to this type of subconscious substitution when the stakes are high, ask yourself, What do I need to know to answer the question that was asked? How would I know if I’m wrong? If it’s hard to tell, proceed with caution.

"What You See Is All There Is." This is a phrase Kahneman frequently uses in his book to describe System 1's tendency to focus solely on the information at hand, failing to account for relevant information that might be missing. It attempts to construct a story with that information to make sense of it and judges its validity based on the coherence of that story rather than on the quality of the supporting evidence.

For example, consider a poor-performing mutual fund that replaces its manager with a star who has a long record of success running a different portfolio at the firm. Three years later, it is among the best-performing funds in its category. That story seems to support the obvious conclusion that the new manager turned things around. But there’s not enough information to know that.

System 1 doesn’t stop to consider alternatives that weren’t presented. For instance, the performance turnaround may not have come from any changes to the portfolio. Rather, after a stretch of underperformance, the holdings the original manager selected may have been priced to deliver better returns going forward. Luck could have also played a role. However, the stories that System 1 creates leave little room for chance, often searching for causal relationships where there are none.

We can do better by asking what other information might be relevant and how reliable the source is, and accounting for chance. The best way to do that is to start with the base rates of the group of which the specific case is a part and adjust from there based on the dependability of the information available about the case. For example, to gauge the likelihood that an active manager will outperform, start with the success rates for that Morningstar Category in the Active/Passive Barometer and make small adjustments to those figures based on what you know about the manager.
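One simple way to picture "start with the base rate and adjust" is a weighted blend, sketched below in Python. This is only an illustration: the function name, the linear blending rule, and every number in it are assumptions made up for the example, not figures from the Active/Passive Barometer or a method prescribed in the book.

```python
def adjust_from_base_rate(base_rate, case_estimate, reliability):
    """Blend a group base rate with a case-specific estimate.

    reliability is a judgment call between 0 and 1: 0 means the case-specific
    information is worthless (stay at the base rate), 1 means it is fully
    dependable (use the case estimate as is).
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return base_rate + reliability * (case_estimate - base_rate)


# All three inputs are hypothetical numbers chosen for illustration.
base_rate = 0.25      # assumed share of funds in the category that outperformed
case_estimate = 0.60  # your gut-feel odds for this particular manager
reliability = 0.2     # how dependable you judge the manager-specific evidence to be

estimate = adjust_from_base_rate(base_rate, case_estimate, reliability)
print(f"Adjusted estimate: {estimate:.0%}")
```

With these made-up inputs the adjusted estimate is about 32%, much closer to the base rate than to the gut-feel figure. The weaker the evidence about the specific case, the less it should move you away from the group's track record.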

Availability Bias. This is the tendency to judge the likelihood of an event based on how easy it is to think of examples. This bias is an extension of System 1's focus on the information at hand and eagerness to answer an easier question. It suggests that perceptions of risk are influenced by recent experiences with losses. After a market downturn, the market feels riskier than it does after a long rally, when memories of past losses are more distant. This may even cause the market to sell off more than it should during downturns, as perceptions of risk change more than statistical measures of risk.

While it’s possible to fight this bias by becoming better informed about the relevant statistics, it’s difficult to overcome. Examples that resonate with us, particularly personal experiences, ring louder than abstract statistics. The best we can do is to recognize that innate bias and educate ourselves.

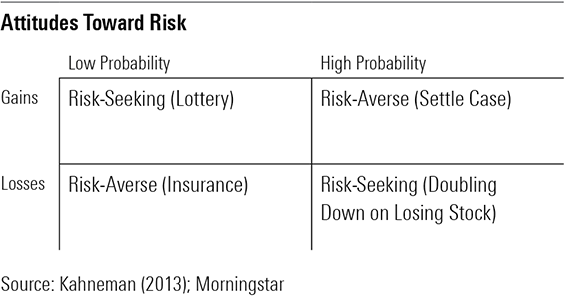

Prospect Theory

For most, losing $500 feels worse than the joy that winning $500 brings. Even though such changes have very little impact on most people's wealth, it is natural to fixate on losses. And $500 feels like a bigger deal when going from a $500 loss to a $1,000 loss than it does going from $10,000 to $10,500. System 1 is the source of these feelings, and it automatically evaluates potential gains and losses. Unfortunately, System 1 is not well-suited to evaluate probability. It tends to overweight unlikely outcomes and underweight high-probability events. This behavior is described by Prospect Theory, which makes a set of predictions, as shown in Exhibit 1.

When the likelihood of gains is high, System 1 underweights the value of the probability and it becomes less sensitive to changes in wealth as they increase. This leads to an undervaluation of the bet and risk-averse behavior, where a smaller gain with no risk would be more attractive to most. For example, this may explain why someone with a strong case might settle for less than the expected value of the lawsuit.

When the likelihood of losses is high, these same effects--underweighting the value of the probability and diminishing sensitivity to changes in wealth--lead to risk-seeking behavior. This is the most surprising prediction of Prospect Theory. To someone who is already in the hole, it may seem attractive to take even more risk to get back to even (such as pouring more money into a stock with deteriorating fundamentals) rather than to accept those losses.

System 1 doesn’t do so great with low-probability events either. We tend to think in terms of possibility rather than probability. This leads to risk-seeking behavior in the realm of gains--like buying lottery tickets--and risk-averse behavior when losses are possible, where we are willing to pay more than the expected value of the potential loss to eliminate the risk. This is how insurance companies stay in business.
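The four predictions described above can be reproduced with a small Python sketch. It is a simplified illustration, not the article's or the book's own calculation: it assumes the functional forms and median parameter estimates reported by Tversky and Kahneman in their 1992 paper on cumulative prospect theory, it handles only gambles with a single nonzero outcome, and the function names and dollar amounts are hypothetical.

```python
# Assumed parameters (Tversky and Kahneman's 1992 median estimates).
ALPHA = 0.88       # diminishing sensitivity to larger gains and losses
LAMBDA = 2.25      # loss aversion: losses loom larger than equal gains
GAMMA_GAIN = 0.61  # curvature of probability weighting for gains
GAMMA_LOSS = 0.69  # curvature of probability weighting for losses


def value(x):
    """Subjective value of a gain or loss of x dollars."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA


def weight(p, gamma):
    """Decision weight: small p is overweighted, large p is underweighted."""
    numerator = p ** gamma
    return numerator / (numerator + (1 - p) ** gamma) ** (1 / gamma)


def gamble_value(p, outcome):
    """Subjective value of a gamble paying `outcome` with probability p, else 0."""
    gamma = GAMMA_GAIN if outcome >= 0 else GAMMA_LOSS
    return weight(p, gamma) * value(outcome)


def preference(p, outcome):
    """Compare the gamble with receiving its expected value for sure."""
    sure_thing = value(p * outcome)
    return "risk-seeking" if gamble_value(p, outcome) > sure_thing else "risk-averse"


for p, outcome in [(0.95, 10_000), (0.95, -10_000), (0.01, 10_000), (0.01, -10_000)]:
    print(f"{p:.0%} chance of {outcome:+,} dollars: {preference(p, outcome)}")
```

Under these assumptions the sketch labels the likely gain and the unlikely loss as risk-averse (settling the lawsuit, buying insurance) and the likely loss and the unlikely gain as risk-seeking (doubling down on a loser, buying lottery tickets). Different parameter values would shift where the crossover points fall, so the output should be read as a qualitative illustration only.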

Think Slow

The best remedy for many of the behavioral biases Kahneman highlights is to slow down, think critically, and be skeptical of your first instinct when the stakes are high. Reframing financial decisions more broadly can also facilitate more-prudent risk-taking and behavior. For example, rather than viewing investments in isolation, it's better to focus on the entire portfolio and evaluate potential gains and losses based on their impact on your long-term wealth. That means if you have a long investment horizon, it isn't necessary to lose sleep over market downturns. It's not always easy, but the added effort and restraint should be worth it.

Disclosure: Morningstar, Inc. licenses indexes to financial institutions as the tracking indexes for investable products, such as exchange-traded funds, sponsored by the financial institution. The license fee for such use is paid by the sponsoring financial institution based mainly on the total assets of the investable product. Please click here for a list of investable products that track or have tracked a Morningstar index. Neither Morningstar, Inc. nor its investment management division markets, sells, or makes any representations regarding the advisability of investing in any investable product that tracks a Morningstar index.
