Replaced by Robots: Imagining the Impact on Labor Markets and Society

Human capital is under threat as robotics and automation increasingly encroach on the labor market.

Technological revolutions have long animated economic history. The concept of "creative destruction," in which technological advancement destroys certain sectors of the economy while giving rise to new ones, has roots in some of the earliest economic thought.1 This process hinges on the idea that machines serve to supplement human labor, primarily labor dedicated to repetitive physical and cognitive tasks. At the moment, machines can solve intensive, well-defined tasks but, for the most part, cannot be expected to define problems or to identify and navigate particularly complex systems without human oversight.

However, stunning advances in artificial intelligence, or AI, are beginning to allow computers to do things considered impossible just a few years ago. The upshot is that this time may be different. There's now no logical reason why, at some point in the future, machines could not do everything humans can, and with greater efficiency. Carried to its logical, frictionless conclusion, this idea describes a world in which virtually all labor productivity has been transferred to capital and the labor market, as it were, has been transformed into a preindustrial, artisan-based economy. The potential impact on wages, returns to education, and the composition of the labor market is profound.

In the meantime, the encroachment of machines on the professional class (e.g., accounting, law, software development, finance) will have a significant impact on society and gradually change the composition of the labor market. While the march of technology clearly points in this direction, social and political factors, as always, could moderate or derail the trend altogether. The future, of course, is unknown. But trying to project the future is key to confronting it successfully.

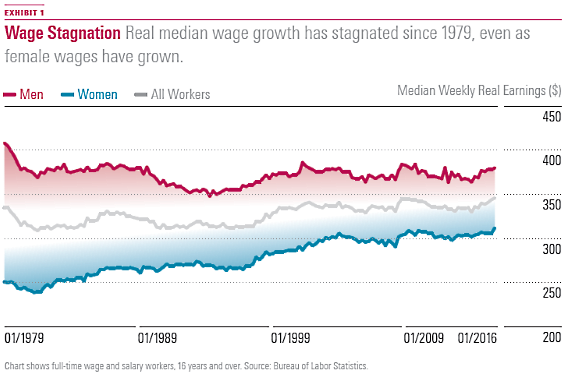

Initial Conditions

A central theme of the 2016 U.S. presidential election is the displacement of American workers. Fear that the U.S. economy may no longer be able to support a large middle class2 has energized voters. Indeed, a cursory examination (Exhibit 1) shows that real wages for men are lower than they were in 1979 and that average wages have increased only slightly since then. In fact, this mild increase in average wages is largely a function of the greater labor force participation of women, whose wages, in aggregate, started from a much lower base. If and when the two groups converge, it will likely be because male wages fall as female wages rise.


A good deal of the real wage stagnation for men is due to the decline of the U.S. manufacturing sector, which has been particularly hard-hit by outsourcing and automation. The effect has been dubbed the "hollowing out" of the middle class and has been accompanied by wage growth at both tails of the skill distribution: over the 1980-2005 period, the lowest- and highest-skilled groups saw increases in real wages, while those in the middle saw a decrease (Autor and Dorn, 2013).

Presidential hopefuls Democrat Bernie Sanders and Republican Donald Trump focused rather successfully on this populist message, citing globalization, in the form of exported jobs and excessive immigration, as the primary culprit behind this hollowing out. There's little doubt that globalization has created significant dislocation, particularly in the manufacturing sector, and this trend looks unlikely to reverse.

However, globalization can also mean a repurposing of labor. Workers in developed economies move from manufacturing jobs, such as assembly-line and factory work, to jobs requiring the administrative skills needed to power a service-based economy. This isn't costless, as service-based jobs typically pay far less (in wages and benefits) than those in manufacturing, but it does offer respite from the far worse possibility of mass unemployment, with its attendant intermediate-term economic, and long-term social, costs.

To a very large degree, however, the debate over globalization has obscured a much more disruptive cause of worker displacement and declining job and wage security: the increasing substitution of capital for labor. For various reasons, this hasn't really entered the debate. It's difficult to imagine a candidate recommending that manufacturers ditch automation for the more labor-intensive methods of yesteryear or that software firms stop developing technology that could replace humans. But this substitution has clearly played a major part in weakening the pricing power and employment opportunities of workers, most obviously, though not solely, blue-collar workers.

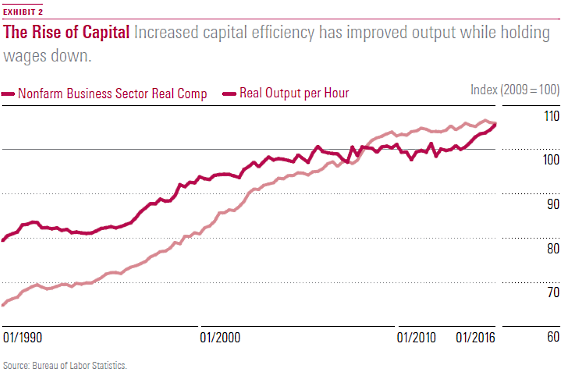

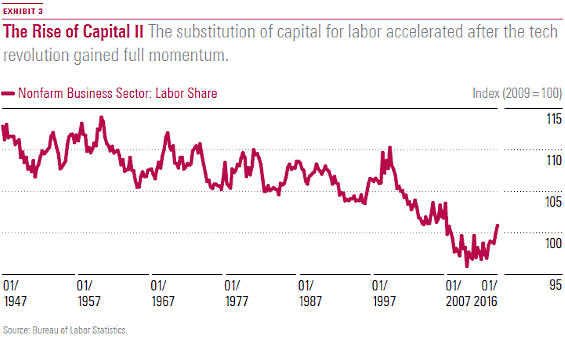

Exhibits 2 and 3 support this point. Exhibit 2 plots worker output and real compensation since 1990. The increase in productivity is largely due to capital augmentation of labor, and the stagnation in real wages is a function of the same phenomenon. People are less important to the production process.


In their 2013 paper "The Global Decline of the Labor Share," authors Loukas Karabarbounis and Brent Neiman maintain that since the early 1980s labor's share of income, once considered a constant (Kaldor, 1957), has been steadily declining globally. They attribute this largely to the decreasing cost of automation, in this context the substitutability of capital for labor. Exhibit 3 shows the trend for the United States, where the decline accelerated once the tech revolution gained momentum.
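The labor share can be written as a simple ratio, which also suggests why the gap between output and compensation in Exhibit 2 and the decline in Exhibit 3 are two views of the same phenomenon. A minimal sketch, assuming total labor compensation W, total income (value added) Y, and employment L are all measured in the same price units (these symbols are ours, not the article's):

```latex
s_L \;=\; \frac{W}{Y} \;=\; \frac{W/L}{Y/L} \;=\; \frac{\text{compensation per worker}}{\text{output per worker}},
\qquad
\frac{\Delta s_L}{s_L} \;\approx\; g_w - g_y
```

Here g_w and g_y are the growth rates of compensation per worker and output per worker. If compensation persistently grows more slowly than productivity, the labor share falls at roughly the difference between the two rates; in practice, differences in how output and wages are deflated complicate the comparison.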


Robot Encroachment

Recent years have seen once-common professions such as secretary, travel agent, and the nearly obsolete switchboard operator become largely redundant due to technology. Of increasing concern, we're now seeing automation eat into the professional classes. The most obvious examples are early robo-solutions such as TurboTax, LegalZoom, and, of course, the emergence of robo-advice in investment management. But this was just the beginning.

More recently, we've witnessed the encroachment of robots on the highly specialized and technical field of medicine. In 2013, the U.S. Food and Drug Administration approved a machine that administers the sedative propofol without the presence of an anesthesiologist. Computer-aided diagnosis, or CADx, has become an increasingly important part of radiology, with a recent study by the Royal Society of Medicine showing that it outperformed radiologists in identifying radiolucency (the dark spots on an X-ray) by a factor of 10.

More impressive, because it marries finger dexterity with analytical prowess, is the Smart Tissue Autonomous Robot, or STAR, which can stitch up tubular tissue, such as blood vessels, with greater accuracy than its human counterparts (Shademan et al., 2016). This is significant because soft tissue is substantially more difficult to negotiate than rigid surfaces, requiring a far more involved marriage of mathematics and mechanical refinement than was previously thought practical.

There's no reason computers could not, at some point in the future, take over other diagnostic and surgical tasks. (Robots are already assisting surgeons in orthopedics.) Both are essentially data processing tasks that require no creativity on the part of the machine. This is unlikely to happen soon, as the complexity of the tasks (the interaction of data processing, analysis, and dexterity) is enormous and the costs of improper execution are high. However, this is but a temporary hurdle.

The implications of such stunning advances are simultaneously monumental and opaque. We know this is inexorably transforming our society, but how is not entirely clear.

Two Schools of Thought

There are essentially two lines of thought on how things will play out. We'll call one the "Creative Destruction School," after the term popularized by Joseph Schumpeter in "Capitalism, Socialism and Democracy" (1942), in which continued innovation destroys one sector of the economy while creating a host of new jobs and economic opportunities in another.

This popular view was expressed by PayPal co-founder Peter Thiel in a September 2014 Financial Times piece titled "Robots Are Our Saviours, Not the Enemy." He emphasizes that robots are extremely effective at large-scale data processing but cannot deal with ambiguity. Invoking his former charge, PayPal, he describes how computers were unable to identify fraudulent transactions because of the adaptive ingenuity of fraudsters. However, computers could identify anomalous transactions and send them to a human for analysis. This increased pairing of human with machine will cause displacement (you don't need 1,000 people looking over every transaction) but not the wholesale elimination of clerical and analytical jobs.
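The human-machine division of labor Thiel describes can be sketched in a few lines. This is a hypothetical illustration, not PayPal's actual system: it assumes a simple statistical rule (flag any transaction more than a chosen number of standard deviations from an account's historical mean), and the function name `route_transactions` and the default threshold of 3 are our own choices.

```python
from statistics import mean, stdev


def route_transactions(history, incoming, threshold=3.0):
    """Split incoming transaction amounts into those a machine can approve
    automatically and those that should go to a human analyst.

    Hypothetical rule: an amount is anomalous if it lies more than
    `threshold` sample standard deviations from the historical mean.
    `history` must contain at least two amounts.
    """
    mu = mean(history)
    sigma = stdev(history)
    auto_approved, human_review = [], []
    for amount in incoming:
        # Comparing the raw deviation to threshold * sigma (rather than
        # dividing by sigma) also works when the history has zero spread.
        if abs(amount - mu) > threshold * sigma:
            human_review.append(amount)
        else:
            auto_approved.append(amount)
    return auto_approved, human_review


if __name__ == "__main__":
    past = [20.0, 25.0, 22.0, 18.0, 30.0, 24.0]
    approved, review = route_transactions(past, [23.0, 950.0, 19.0])
    print("auto-approved:", approved)  # [23.0, 19.0]
    print("sent to a human:", review)  # [950.0]
```

The machine screens every transaction, but only the outlier reaches a person, which is the sense in which pairing displaces reviewers without eliminating them. A real fraud system would rely on far richer signals than transaction size alone.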

In a similar vein, the World Economic Forum's 2015 report, "The Future of Jobs," proposed that while there is likely to be significant dislocation over the 2015-20 period (a net loss of 5.1 million jobs, mostly white-collar office positions), new jobs, many of them currently unnamable, would ultimately be created. However, it also points to the hollowing out of the administrative white-collar class, just as automation has led to the dislocation of blue-collar workers. The WEF predicts that by 2020 robotics, autonomous transport, artificial intelligence, and machine learning will have the biggest impact on the labor force. While the report doesn't do so explicitly, it's easy to extrapolate these trends into the future and see that they chip away at the job and wage security of the professional class.

This brings us to the second school of thought, which I'll refer to as the "Singularity School," after the concept popularized by mathematician and science fiction writer Vernor Vinge (1993)3: a time when computer IQ exceeds that of human beings, such that machines function on an entirely different intellectual plane, one that human intelligence is too limited to traverse. That this scenario has moved well beyond the realm of science fiction shows how close we are to realizing it. This position, advocated by people such as author Andrew Keen and economist George Magnus, paints a grim picture in which things held dear by the professional class, such as education and demonstrated intellect, are priced down by the labor market until they have little if any value. Taken to its logical terminus, this is a world where human capital becomes a near-negligible input to the production process, with machines setting wages and the few owners of machine capital entirely in control.4 If human labor is priced against the cost of capital investment and maintenance, we can expect rock-bottom wage rates for everyone, regardless of education and native talent.

Both the Creative Destruction School and the Singularity School have merit. The former is supported by economic history; the latter by a body of inductive reasoning that calls into question the merit of projecting the historical record forward. Let's take a look at history in Exhibit 4.

Robots: A Retrospective

The most primitive economies are essentially brawn-based. Human labor is largely priced by the ability to perform physical tasks associated with farming and building. A number of studies (e.g., Thomas and Strauss, 1997) show that in modern-day less-developed economies, men earn more than women as a function of body mass, and thus perceived brawn, and that men with more brawn earn more than those with less. Along the same lines, Thomas and Strauss found that health status affected earning power for men, but less so for women, whose jobs didn't rely on brawn.

Historically, in developing economies most people worked at brawn-based activities, but others worked as artisans (under the guild system), blacksmiths, cabinetmakers, tailors, luthiers, and so forth. Although they may have employed apprentices, they were entirely in control of the production process and, typically, distribution. Importantly, the factors of production were almost entirely "human factors," with physical capital accounting for a minuscule share.

Technology changed the production process itself. Improvements in steam power ushered in the first Industrial Revolution, whose inception historians place at around 1760. The steam engine served as a direct adjunct to human brawn and enabled the decomposition of the production process into smaller units. The term "de-skilling" (Braverman, 1974) has been used to describe this process, in which labor's skill sets were reduced in breadth and narrowly focused. Now one person carved the wood, another assembled the cabinet, and a third finished it. The improvements in productivity and, for many, quality of life were clear, but not everyone was happy. The threat of unemployment inspired weaver Ned Ludd5 to destroy two stocking frames (knitting machines), giving his name to the "Luddite" movement and a wave of anti-industrial activity across England.

The second Industrial Revolution is placed at 1870-1914, with increased mechanization of large-scale manufacturing and the refinement and mass adoption of existing technology. It also further embedded "continuous flow" (i.e., assembly line) and batch processing methods. This both destroyed many jobs and created a host of new ones, as technical and administrative functions replaced physical labor and the increasing atomization of the production process required greater levels of administration and governance. Indeed, the growing sophistication and dominance of the corporation during this period demanded such jobs. Secretaries, clerks, and accountants became the lifeblood of the industrial economy. However, this period also saw the introduction of the "reproducing" machine, the machine that created other machines.

The most recent revolution is the Information Age, or Digital Age, which is commonly dated to the invention of the transistor by Bardeen, Brattain, and Shockley in 1947. Now enormous amounts of information could be stored and transmitted quickly, if not instantaneously. The shrinkage of computers from the elephantine ENIAC to the mainframe and then the PC enormously increased their effectiveness. Moore's law (1965), which holds that the number of transistors in an integrated circuit doubles about every two years, has held for much of recent history.
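The doubling rule compounds quickly. Writing N_0 for the transistor count in a starting year t_0 (our notation, not the article's), a two-year doubling period implies:

```latex
N(t) = N_0 \cdot 2^{(t - t_0)/2}, \qquad \frac{N(t_0 + 20)}{N_0} = 2^{10} = 1024
```

That is, roughly a thousandfold increase every 20 years. This assumes the doubling period holds exactly; in practice the pace has varied over time.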

Despite the tremendous advancements made during the Information Age, such innovations served as complements not just to human brawn (if indirectly) but to low-level or rote human intelligence and repetitive cognitive tasks. The term "calculator," in fact, originally referred to a person who literally calculated the answers to long, tedious sets of equations that can now be solved in virtually no time on a computer.6

But things change rapidly. Levy and Murnane (2004) argued that the pattern-recognition capabilities of machines were too limited for them to handle very complex tasks, such as driving a car, for the foreseeable future. Not six years later, Google's driverless cars were being tested on public roads.7

Thanks to rapid developments in machine learning, or ML, the ability of robots to deal with complex and ambiguous problems continues to improve. In fact, the Morningstar Quantitative Equity Rating is produced by a "supervised" ML process in which an algorithm is trained to mimic the behavior of a comparatively small number of analysts so it can rate a large number of stocks. Critically, this approach requires the input of human analysts because the algorithm is "trained on" their output. But instead of serving as a mere adjunct, it literally replicates their rating work so that it can be applied to a wider universe of stocks. That said, its presence does increase productivity and lower the cost, or compensation, per unit of analyst labor.
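The supervised-learning idea, training on a small set of analyst ratings and then applying the learned mapping to a much larger universe, can be illustrated with a deliberately simple classifier. This is not the model behind the Morningstar Quantitative Equity Rating, which the article does not specify; it swaps in a nearest-centroid classifier, and the two features (a price-to-fair-value ratio and a return-on-capital figure), the rating labels, and the tickers are all hypothetical.

```python
from math import dist


def train_centroids(labeled):
    """Learn one centroid (mean feature vector) per rating.

    `labeled` is a list of (features, rating) pairs whose ratings come
    from human analysts. This is the "supervised" step: the algorithm
    only knows what the analysts told it.
    """
    sums, counts = {}, {}
    for features, rating in labeled:
        if rating not in sums:
            sums[rating] = [0.0] * len(features)
            counts[rating] = 0
        sums[rating] = [s + f for s, f in zip(sums[rating], features)]
        counts[rating] += 1
    return {r: [s / counts[r] for s in sums[r]] for r in sums}


def predict(centroids, features):
    """Assign the rating whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda r: dist(centroids[r], features))


if __name__ == "__main__":
    # Hypothetical features: (price / fair value, return on capital).
    analyst_rated = [
        ([0.8, 0.25], "undervalued"),
        ([0.7, 0.30], "undervalued"),
        ([1.4, 0.05], "overvalued"),
        ([1.6, 0.02], "overvalued"),
    ]
    model = train_centroids(analyst_rated)
    # The trained model can now rate stocks no analyst has covered.
    unrated = {"STOCK_A": [0.9, 0.20], "STOCK_B": [1.5, 0.04]}
    for ticker, feats in unrated.items():
        print(ticker, predict(model, feats))
```

Note the dependence the article stresses: without the analyst-rated examples there is nothing to learn, yet once trained the model can be applied to any number of unrated stocks at negligible marginal cost.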

Why Now May Be Different

A human no longer needs to carefully specify a problem for a robot; the robot can observe human behavior, mimic it, and learn on its own, adapting to new, ambiguous situations. The concept of labor-capital complementarity that defined the whole of economic history is breaking down.

In their widely cited and very readable "The Future of Employment: How Susceptible Are Jobs to Computerisation?" (Frey and Osborne, 2013), the authors construct a model (one that, appropriately, uses machine learning) to estimate the impact of computerization on different jobs in the United States. Critically, they decompose each job into a set of attributes ranging from things such as "finger dexterity" to "persuasion" and "negotiation." Occupations are then defined as bundles of these attributes. Given this and forecasts of technological change (but holding the composition of the workforce static), they predict that 47% of the U.S. workforce is at risk of either lower real wages or unemployment, with another 19% in the medium-risk category.
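The idea of treating an occupation as a bundle of attributes can be sketched as follows. This is a toy illustration, not Frey and Osborne's model (the paper uses a more elaborate probabilistic classifier fitted to expert-labeled occupations): the attribute weights, the bias term, the example occupations, their employment counts, and the function names are all hypothetical. The 0.3 and 0.7 cutoffs for low, medium, and high risk follow the categories the paper reports.

```python
from math import exp

# Hypothetical weights: a high score on a hard-to-automate attribute
# lowers the estimated probability of computerization.
WEIGHTS = {
    "finger_dexterity": -1.0,
    "persuasion": -3.0,
    "negotiation": -3.0,
    "originality": -2.0,
}
BIAS = 4.0  # hypothetical baseline log-odds of automation


def automation_probability(bundle):
    """Map an attribute bundle (scores in [0, 1]) to a probability via a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * level for name, level in bundle.items())
    return 1.0 / (1.0 + exp(-z))


def risk_category(p):
    """Low below 0.3, high above 0.7, medium in between."""
    if p > 0.7:
        return "high"
    if p >= 0.3:
        return "medium"
    return "low"


def share_at_high_risk(occupations):
    """Employment-weighted share of workers in high-risk occupations.

    `occupations` is a list of (employment, bundle) pairs; as in the
    study described above, workforce composition is held fixed.
    """
    total = sum(emp for emp, _ in occupations)
    high = sum(
        emp
        for emp, bundle in occupations
        if risk_category(automation_probability(bundle)) == "high"
    )
    return high / total


if __name__ == "__main__":
    clerk = {"finger_dexterity": 0.2, "persuasion": 0.1, "negotiation": 0.1, "originality": 0.1}
    negotiator = {"finger_dexterity": 0.1, "persuasion": 0.9, "negotiation": 0.9, "originality": 0.7}
    for name, bundle in [("clerk", clerk), ("negotiator", negotiator)]:
        p = automation_probability(bundle)
        print(name, round(p, 2), risk_category(p))
    print(share_at_high_risk([(600, clerk), (400, negotiator)]))
```

Aggregating occupation-level probabilities by employment is what turns a job-by-job model into a headline figure such as a share of the workforce at high risk.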

These are not all manufacturing jobs. A good number fall under "sales and support" and "office and administrative support." Disturbingly, "service" jobs are also very much at risk. That these jobs, which have (for better or worse) served as the employment of last resort for many, are being automated away implies substantial social cost. In the near term, the authors predict the sort of high-skill/low-skill bifurcation we've seen in recent years. But we're already seeing robots eat into the professional class, and this is nearly certain to continue.

The hollowing-out-of-the-middle-class phenomenon looks to become a wider erosion that will change the labor market as we have come to think of it--this is the singularity.

Revolution or Evolution

This all sounds very grave. But it probably isn't feasible, at least from an economic perspective. An economy cannot survive a true singularity. As Benzell, Kotlikoff, LaGarda, and Sachs (2015) emphasize, at some point the dominance of robots chokes off consumption. Say's Law (1802), that supply creates its own demand, is violated. Robots have no desires, a troublesome characteristic noted in Karel Čapek's 1920 play "R.U.R.," which gave us the word "robot." They consume next to nothing, and without consumption there's no market.

While an "underemployment equilibrium" seems imaginable, the nature of such an equilibrium leads to an interesting thought experiment. In this singularity world of total automation, capital resides in the hands of a few individuals (super capitalists or feudal capitalists). Like all capitalists, they are chiefly concerned with the return on capital. They therefore need to maintain a sufficient level of consumer demand and so agree to tax rates high enough to subsidize the now-unemployable, giving them the means to consume some "optimal" amount: the amount that maximizes post-tax return on capital. Critically, this "universal" wage is not in place for purposes of social welfare, as in the recent Swiss referendum on basic income (at least not directly), but rather to ensure that the velocity of money remains sufficiently high. With no consumption, there's no return on capital.

Running with this hypothetical scenario, we would have a sizable majority of people with all of their basic needs met, no work, and a huge surplus of free time. This could be a recipe for massive social unrest, but it needn't be. With all that free time, people will want to spend time on things such as travel, which in turn will create at least some jobs that presumably cannot be handled satisfactorily or cost-effectively by robots.

A large number of closely held robots will likely limit the variety of goods within product types, particularly commodity product types (like breakfast cereal). In other words, we'll see close substitute goods disappear. Today, for example, a trip to the grocery store will yield several brands of corn flakes, all essentially the same, differentiated if at all by packaging, size, color, ingredients, and level of vitamin fortification. But in the singularity economy, such differentiation, appealing to small variations in consumer taste, will not be profitable (because consumers themselves won't be significantly differentiated by income, as they are today) and will disappear.

But this homogenization of most goods and services is unlikely to make differences in taste actually disappear. So we can imagine a re-emergence of the artisan class, providing differentiated products to small groups of interested consumers. As in days of old, this group would be fully in control of the production process, supplying goods to niche, recreation-oriented markets that the feudal capitalists cannot serve efficiently. A re-emergence of a narrowly focused artisan class servicing local markets seems likely.

A true singularity of jobless idle masses is harder to envision than a return to something resembling the preindustrial economies of old.

The implications for the future of human capital are important. Currently marketable skills, such as command-line programming, may be on their way out as computers can increasingly write their own code. Swaths of highly technical--and very well-defined--jobs are, as we've seen, already on the chopping block, and it may be that softer attributes such as persuasion, imagination, empathy, ability to read others, and verbal and nonverbal communication become the overriding determinants of the value of human capital. In fact, we'd argue that the ability to spot a mistake and allow it to point you in a better direction may be something computers will never be able to do. Such things are not uncommon in the arts and to a lesser degree in abstract disciplines such as mathematics. It is challenging to imagine this in a robot economy.

Rethinking how we educate people and what we actually pay for education will be vital. And, of course, none of this is a given, as people may collectively decide this is not a direction in which they want to go. Technology is for the moment in the hands of humans, and humans have the ability to put guardrails around technology to protect their interests. The future is unknown, and while the singularity economy is imaginable, how we get there in a world without a central government or a single economy is a particularly thorny thought experiment. But the broad implications are very real and will increasingly shape how households and businesses invest in people, education, and capital.

Endnotes

1. Though the phrase was popularized by Joseph Schumpeter, the concept can be traced at least back to Adam Smith (1776), David Ricardo, and Karl Marx.

2. The typical image of a large "middle class," with respect to consumption and financial security, was forged at the close of World War II in the United States and is anomalous (in size) relative to history and to other economies.

3. The term was coined by mathematician Stanislaw Ulam in John von Neumann's obituary (1957).

4. Indeed, Karabarbounis and Neiman show that as global labor share has declined, corporate savings and earnings have increased.

5. Ludd, whose real name may have been Ludlam, just barely survives in the historical record and may have acted out of revenge on his employer as opposed to fear of being replaced by a machine.

6. Anyone who has used Microsoft Excel Solver to minimize a function of, say, only three variables should imagine how long it would take to solve by hand.

7. According to the Financial Times, upon hearing that California regulators wanted to mandate that a human sit behind the wheel of a driverless car, representatives from Alphabet, Tesla Motors, and Ford insisted that allowing humans to sit behind the wheel of a driverless vehicle would be too risky. Humans get distracted, use cellphones, get drunk, and do other things that take their minds off the road.

References

Autor, D. and Dorn, D. 2013. "The Growth of Low-Skill Service Jobs and the Polarization of the U.S. Labor Market." American Economic Review 103(5) (August): 1553-1597.

Benzell, S., Kotlikoff, L., LaGarda, G., and Sachs, J. 2015. "Robots Are Us: Some Economics of Human Replacement." NBER Working Paper No. 20941, National Bureau of Economic Research.

Braverman, H. 1974. Labor and Monopoly Capital: The Degradation of Work in the Twentieth Century (NYU Press).

Frey, C. and Osborne, M. 2013. "The Future of Employment: How Susceptible Are Jobs to Computerisation?" Oxford University (September).

Kaldor, N. 1957. "A Model of Economic Growth." The Economic Journal 67(268): 591-624.

Karabarbounis, L. and Neiman, B. 2014. "The Global Decline of the Labor Share." The Quarterly Journal of Economics 129(1): 61-103.

Levy, F. and Murnane, R.J. 2004. The New Division of Labor: How Computers Are Creating the Next Job Market (Princeton University Press): 13-30.

Shademan, A. et al. 2016. "Supervised Autonomous Robotic Soft Tissue Surgery." Science Translational Medicine 8(337): 337ra64.

Thomas, D. and Strauss, J. 1997. "Health and Wages: Evidence on Men and Women in Urban Brazil." Journal of Econometrics 77(1): 159-185.

Disclosure

The Morningstar Investment Management group, a unit of Morningstar, Inc., includes Morningstar Associates, LLC, Ibbotson Associates, Inc., and Morningstar Investment Services, Inc., which are registered investment advisors and wholly owned subsidiaries of Morningstar, Inc. All investment advisory services described herein are provided by one or more of the U.S. registered investment advisor subsidiaries. The Morningstar name and logo are registered marks of Morningstar, Inc. The information, data, analyses, and opinions presented herein do not constitute investment advice; are provided as of the date written and solely for informational purposes only and therefore are not an offer to buy or sell a security; and are not warranted to be correct, complete or accurate. Past performance is not indicative and not a guarantee of future results.

This article originally appeared in the August/September 2016 issue of Morningstar magazine.
