Will ChatGPT Improve Financial Literacy?

The program has glitches, but also potential.

Testing ChatGPT

A few weeks ago, someone commented on a message board that one of my articles read as if scripted by ChatGPT. For those who have slept since Thanksgiving, ChatGPT is a new and wildly popular artificial intelligence project. Create an account (currently free), enter a prompt, and ChatGPT will immediately reply.

Although I doubted that specific claim, I was curious about ChatGPT’s proficiency. Could the program create useful investment-related content? I visited the site and asked various questions, such as:

1) Do hedge funds hedge?

2) Does market-timing work?

3) Why do investors allocate assets?

4) Did Jack Bogle like exchange-traded funds?

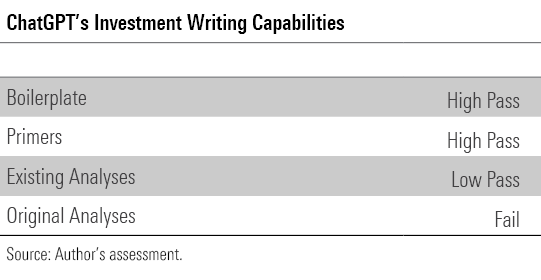

After reviewing the answers, this is how I would grade the program’s abilities.

Boilerplate

Ask ChatGPT to write a prospectus for a government-bond fund, and it will return 35 lines that mimic the tedious actual experience. Among its comments are “the fund aims to maintain a high degree of liquidity while minimizing the risk of loss of principal” and “investors in the fund are subject to certain fees and expenses, including management fees, administrative fees, and other expenses.” And of course, “past performance is not indicative of future results.”

In other words, if it were programmed to write a complete prospectus, ChatGPT would surely excel. As illustrated by its investment-risks blurb, which accurately describes the characteristics of government bonds (as opposed to, say, private-market equities), the program knows from where to cut and paste. Whatever the humdrum task, prospectus or otherwise, ChatGPT stands ready.

Primers

Investment primers improve on boilerplate. Where the latter strings together lists, primers are cohesive essays. When properly constructed, each sentence follows from the previous, as does each paragraph. Ordinary computer routines can assemble boilerplate, but that does not mean they can write primers.

ChatGPT can—as long as one provides the appropriate prompt. When I fed it the question “Why do investors allocate assets?” it returned five bullet points, each accompanied by a brief discussion. Helpful but mechanical. However, when I reworded my request as “Write an essay about asset allocation,” the program delivered a six-paragraph response that was not only accurate, but sufficiently well-written to earn credit on a college examination.

In a single day, a ChatGPT user could generate enough content to populate an investment-basics website. The program could discuss the rewards and risks of asset classes, the difference between mutual funds and hedge funds, or how accountants calculate net asset values. Another use could be classroom material. If students (either high school or college) learned a few dozen ChatGPT responses, they would graduate knowing far more about investments than they currently do.

Existing Analyses

This is where things get interesting. Requiring ChatGPT not simply to recite a topic’s pros and cons, but instead to advance a conventional argument, moves further up the food chain.

The program often does well. It reacted splendidly to the query “Does market-timing work?” After defining market-timing, ChatGPT wrote, “While market-timing can be tempting, it is generally not a reliable strategy and is difficult to execute successfully over the long term.” Spot on. It then defended its assertion with three pertinent points and concluded by advocating long-term investing rather than making tactical trades. I would have written much the same.

The program fared equally well when asked “Are liquid alternative funds good?” and “Do hedge funds hedge?”

However, when I inquired whether special-purpose acquisition companies are sound investments, ChatGPT missed the strongest argument against SPACs, which is that their contract terms favor their institutional sponsors, not their retail buyers. It was only when I reworded the question to read “Are SPACs instruments by which Wall Street can extract money from unsuspecting Main Street investors?” that the program addressed that issue.

An analysis that requires the reader to ask a leading question isn’t much of an analysis. Worse yet was ChatGPT’s response to “Did Jack Bogle like exchange-traded funds?” Deciding, apparently, that all index-fund promoters like ETFs, ChatGPT imagined a world wherein Bogle was not only a “strong advocate” of ETFs but was also “instrumental in their development.” Nope. Bogle famously detested both the invention and Vanguard’s own ETF business. (ChatGPT also erroneously pegged Bogle as recommending international diversification.)

As others have demonstrated when investigating other topics, ChatGPT has an unpleasant habit of inventing facts when pressed. Consequently, I award ChatGPT a “low pass” for its ability to write analyses based on existing arguments. Its general logic is sound, if sometimes incomplete, but beware the details.

Original Analyses

Who are we kidding? ChatGPT has no more chance of creating a lucid original discussion than I have of checkmating AlphaZero. Which brings us back to my message board critic. Ironically, the article that he dismissed as resembling a ChatGPT product, called “Long TIPS are Wacky,” was among the unlikeliest of artificial intelligence candidates. That essay was quirky, idiosyncratic, and personal. ChatGPT is none of those things.

Thus, when I asked the program to recreate my article, by inquiring whether long Treasury Inflation-Protected Securities are wacky, ChatGPT demurred. “While TIPS may not be appropriate for every investor, they are certainly not considered ‘wacky’ or unorthodox in the world of finance,” it huffed. Great. That and $3 will get me a black coffee. Meanwhile, I have editors to please and a twice-weekly column to file.

What my detractor should have written was “Rekenthaler’s article is silly and dunderheaded.” He might have been correct about that. But he certainly was wrong that ChatGPT could have devised something even remotely similar.

Looking Forward

Back in the day, newspapers published a great deal of reliable investment education. Much of it was elementary, but so too was most readers’ knowledge of the subject. Besides, learning benefits from repetition. Such articles have almost entirely vanished. They have been only partially replaced by internet content, but much of that is inadequately edited and therefore of lower quality.

Perhaps ChatGPT can help to fill the gap. With proper supervision (as with human writers, no ChatGPT submissions should be published without oversight), the program could recreate much of that missing material. Such work could not only appear on websites and in classrooms, but could also be collected by financial advisors to share with their clients.

To be sure, if ChatGPT is to improve financial literacy, its factual glitches must be either avoided (by not submitting such prompts) or fixed (by the program’s creators). But those problems do not strike me as insurmountable. The current version of ChatGPT, after all, is but the beginning.

The opinions expressed here are the author’s. Morningstar values diversity of thought and publishes a broad range of viewpoints.

The author or authors do not own shares in any securities mentioned in this article. Find out about Morningstar’s editorial policies.
